28th April 2021. Bias | Geoengineering

Algorithms are coded bias; geo-engineering is geo-politics

Welcome to Just Two Things, which I try to publish daily, five days a week. Some links may also appear on my blog from time to time. Links to the main articles are in cross-heads as well as the story.

#1: Algorithms are coded bias

The notion that algorithms are a source of social disadvantage because they encode existing biases into technology systems is rapidly crossing over into the political mainstream.

The documentary Coded Bias, now on Netflix, is more proof of this. The film was made by activist director Shalini Kantayya. I haven’t seen it yet, but the South African magazine Daily Maverick has a recent review article.

(Source: Netflix)

The film is bookended by Joy Buolamwini, who is now known as the founder of the Algorithmic Justice League. Her journey into this world started at MIT:

she was making an art-science project that could detect faces in a mirror and project other faces and images over them. But the detection software seemed unable to detect black faces. Buolamwini’s curiosity piqued, she went about researching the data set images used to teach face detection algorithms to see, and what she discovered was that there were significantly fewer black faces in these data sets.

Of course there were. But when these types of software are deployed by police forces, the outcome is the misidentification of black people as suspects: “At one point we see four undercover London policemen stop and question a 14-year-old black boy in school uniform because his face matched with their criminal database.”

The racist applications of algorithms are well known now, I think, but there’s been less discussion of how they act to reinforce the status quo.

Imagine an algorithm that is purportedly designed to evaluate the highest performing demographic in a company to determine what kind of person to hire. It would only be able to “learn” from employees who have already worked at that company... This is exactly what happened at Amazon when they tried to use an algorithm to assess resumes of potential employees. Because the company was dominated by men, the highest achieving employees were – you guessed it – men. So the algorithm learned to reject any resume from a female applicant.

But then again: the tech campaigner Cathy O’Neil defines an algorithm as “using historical information to make a prediction about the future.” The problem is built into the structure.
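To make that structural point concrete, here is a toy sketch in Python. It is emphatically not how Amazon's system worked (the review doesn't go into that detail): the hiring history is made up, and the names `fit_hire_rates` and `predict` are hypothetical, chosen purely for illustration. All it shows is that a "model" which learns only from past decisions will hand those decisions straight back as predictions.

```python
from collections import Counter


def fit_hire_rates(history):
    """Learn the past hire rate for each group from (group, hired) records."""
    seen = Counter(group for group, _ in history)
    hired = Counter(group for group, was_hired in history if was_hired)
    return {group: hired[group] / seen[group] for group in seen}


def predict(rates, group, threshold=0.5):
    """Recommend a candidate only if their group's past hire rate meets the threshold."""
    return rates.get(group, 0.0) >= threshold


# Hypothetical, made-up history: 80 men (60 hired) and 20 women (5 hired).
history = (
    [("men", True)] * 60 + [("men", False)] * 20
    + [("women", True)] * 5 + [("women", False)] * 15
)

rates = fit_hire_rates(history)
print(rates)
print(predict(rates, "men"), predict(rates, "women"))
```

Nothing in the code mentions merit, and nothing in it is overtly discriminatory. The skew comes entirely from the history it was given, which is the point.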

One of the features of this whole area is that while only 14% of AI researchers are women, they are almost the only voices heard making these critiques. John Naughton noted this point in a recent Observer/Guardian column. It’s not chance that the two people who left Google recently over an article on AI ethics are both women. Almost all the interviewees in Coded Bias are women.

But then again: as Upton Sinclair once said, “It is difficult to get a man to understand something, when his salary depends upon his not understanding it.” Even the apparently reformed tech bros who pop up in programmes about the issues caused by the growth of pervasive digital systems seem to have problems seeing this as a systemic issue.

It is striking how effective these women critics have been. In particular they have managed to pull the issue into the public sphere, so that figures who are not associated with technology research have joined the fray. One more piece of evidence of this: Daron Acemoglu, better known for his research on states, power, and prosperity, has a long, long piece in Boston Review on the need for policy responses to shape the application of AI:

The direction of AI development is not preordained. It can be altered to increase human productivity, create jobs and shared prosperity, and protect and bolster democratic freedoms—if we modify our approach. In order to redirect AI research toward a more productive path, we need to look at AI funding and regulation, the norms and priorities of AI researchers, and the societal oversight guiding these technologies and their applications.


#2: Geoengineering is one big governance headache

I touched here briefly on some of the issues that will emerge if we decide that the only possible way to deal with global warming is to start messing with the atmosphere. Since then there’s been a piece by Jocelyn Timperley at China Dialogue that goes into the issue in some detail. The geopolitical ramifications could be enormous, and there is currently next-to-no governance.

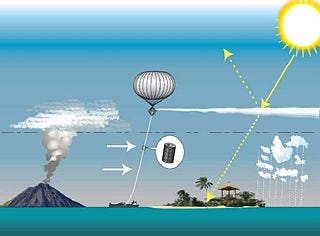

(Balloon-based aerosol injection of sulphate particles into the atmosphere. Image: Hugh Hunt, CC, via Wikipedia)

She defines solar geoengineering as “a range of hypothetical techniques capable of increasing the reflection of sunlight before it warms the Earth.”

There are a couple of geoengineering proposals being worked on, at early stages.

Stratospheric aerosol injection, the most prominent proposal, aims to mimic the effects of a volcano by injecting sulphate particles into the stratosphere to reflect some incoming sunlight. Marine cloud brightening, another technique, aims to increase the reflectivity of marine clouds by introducing more cloud-forming particles. Neither technology has yet been proven to work.

Harvard University was hoping to try a small scale test of the first of these, but has lost its space partner, the Swedish Space Corporation, after civil society protests in Sweden. The Australian government is considering the second as a way to protect the Great Barrier Reef.

Timperley runs through the risks quickly: solar geoengineering doesn’t address all of the effects of warming (e.g. ocean acidification is not slowed by it); it could affect both food production and the hydrological cycle in unanticipated ways; there’s a ‘moral hazard’ argument, in that a focus on geoengineering may reduce our willingness to reduce emissions even though we can; and there’s a risk of “termination shock”: once you start, you have to keep the programmes running, because stopping abruptly would let the masked warming return quickly.

But the biggest issue is likely to be geopolitical:

States could react aggressively to other countries carrying out solar geoengineering without an international consensus. A country could in theory unilaterally decide to deploy it, as Kim Stanley Robinson portrays India doing, after a catastrophic heatwave, in his recent novel The Ministry for the Future.

To the extent that any governance exists at the moment, it goes back to 2010, when the Convention on Biological Diversity asked states to refrain from deploying solar geoengineering that might affect biodiversity.

Geoengineering researchers have tried to create a framework that could act as the basis for governance. The five Oxford Principles say that geoengineering should be regulated as a public good; there should be public participation; geoengineering research should be openly published; impacts should be assessed openly; and governance should be in place before any deployment.

Progress on an actual governance body is slow, though. It probably doesn’t help if it gets attached to an existing body, but creating a new body with extensive powers (for example, to stop all experiments) could create conflicts with powerful national interests such as the USA and China.

So it’s possible that the first steps will involve a rather more limited approach, in which, say, the UN sets up a body to review the scientific research and authorise limited experiments. This also lets the countries of the Global South have a voice in the process, since they have most to lose. And we’ve barely started on the question of damage and potential liabilities yet.

j2t#086

If you are enjoying Just Two Things, please do send it on to a friend or colleague.