4 August 2023. Games | AI

What if wargames weren’t about making war? // What AI needs to learn from autonomous vehicles. [#483]

Welcome to Just Two Things, which I try to publish three days a week. Some links may also appear on my blog from time to time. Links to the main articles are in cross-heads as well as the story. A reminder that if you don’t see Just Two Things in your inbox, it might have been routed to your spam filter. Comments are open. And: have a good weekend!

1: What if wargames weren’t about making war?

What if wargames weren’t just simulated versions of forms of military conflict? This is an interesting question asked by Mary Flanagan in a recent article in The MIT Press Reader. The article is based on a chapter she has in a recent book, Zones of Control: Perspectives on Wargaming.

Flanagan is a professor at Dartmouth College and has run a games research laboratory, Tiltfactor, for the past 20 years. She starts the article by admitting to her childhood fascination with wargames. Her cousin, in the US Marine Corps, would come home and describe the training games his platoon got involved in.

Much later, a friend described a wargame she had been involved in at a nuclear plant, which effectively simulated defending the plant against an invading army:

The simulation planners did not think to model the situation using lone warrior terrorists, differently trained teams, or individuals with something to prove — instead, the simulation focused on a massive invading army, complete with tanks and even a front line.

So, wargames are speculative fictions, and they are fictions that are often driven by powerful mental models. The article essentially suggests that if we design games around different mental models we may be able to change behaviour in the real world:

Wargaming is about negotiating power, about systems modeling, about strategy. The scenarios we provide in our games, and the possible outcomes we permit, show us the ways in which we are capable of thinking.

She starts with a couple of examples that subvert chess, the oldest of all war games.

The first was designed by the artist and pacifist Yoko Ono. ‘Play It By Trust’, first developed in 1966, is played with the full complement of chess pieces on a sixty-four square board, but all the pieces are white, and so are all the squares:

Instructions for players are slim: We are to play as long as we can remember where our pieces lie. Ono’s all-white chess erases the distinction between sides and asks the player to approach the game in a different manner from a typical antagonistic stance. It is a model for play that lends itself to thoughts about commonalities, not difference.

(Yoko Ono, ‘Play It By Trust’. Installation, Contemporary Art Museum St Louis. 2011)

Another chess-based game was developed by Ruth Catlow at the turn of the century, in response to the Iraq War. ‘Rethinking Wargames: Three Player Chess’ was developed after Catlow watched the street protests in London against the war.

One player plays as the white royal pieces, a second the black royal pieces, and a third player has all of the pawns, both black and white. The imagery is clear enough:

White and black aim to annihilate each other, but the pawns place themselves at risk and attempt to stop the fighting, or at least slow down the violence, so that negotiation might take place. After five nonviolent rounds, the pawns will have won by reducing retaliatory behavior, thus allowing the battlefield to become overgrown with grasses, masking the checkerboard and effectively stopping war.

So this is a wargame where the people who are most likely to get hurt get a voice in the game. Having described these games, she pauses (given that the article is extracted from a chapter in a book about ‘proper’ wargames) to caution the reader not to be dismissive:

These are not jokes, nor annoying interferences with “real” models of war. The wargames proposed by Ono, Takako, and Catlow are legitimate conflict resolution models that engage players in new ways and should be studied by the wargaming community for modeling new approaches to old problems.

And as she says, wargames designed around these alternative models of wargaming are also ways of practising and experiencing different ways of resolving differences, and engaging wider audiences at the same time:

I have not played many wargames in which players literally stop conflict by having a moving aesthetic experience that changes their worldview. I have not played a wargame in which the public acts as a real player in the game, and surprises the powers-that-be with an effective pacifist move. Our models for wargames need to continue to evolve using unusual, creative solutions to problem solving.

She cites such an example: the work done in the 1990s in Bogota by the then mayor, Antanas Mockus Šivickas, to reduce crime and violence in the city:

He did so through rather untraditional and effective means: he hired mimes to shame drivers at certain lawless intersections, stirring dramatic prosocial behavior shifts and changing the city through these nonviolent, creative tactical performances. He asked his citizens to pay extra voluntary taxes, and, surprisingly, they did: In 2002, the city collected more than three times the revenue it had in 1990. He distributed 350,000 “thumbs up” and “thumbs down” visual cards that the public could use to peacefully show support or discourage antisocial public behavior.

Of course, these kinds of playful and co-operative strategies run completely contrary to conventional understandings of how wargames work. As she says, wargaming has a player base that is overwhelmingly white and male, and tends to replicate “quintessential notions of power, domination, nationalism, binary truths, and a sense of correctness or self-righteousness”.

And of course, these notions are built on deep social and narrative structures. This means that they are hard to think beyond. At the same time, there are other social and narrative structures to draw on:

Finding and fostering alternative models for conflict resolution using social norms or aesthetics is a surprisingly difficult challenge. But ultimately, I don’t want to live in a world where there only can be victors lording over the slaughtered, defeated other.

Well, she doesn’t have to have all the answers, and these are quite powerful questions. And the set of questions which she throws out in her last paragraph is certainly worth some time:

What will wargames look like with different kinds of rules, with new expectations, with radical strategies and consensus built in? What if their simulation of conflict isn’t so much about war as it is about critical thinking and critique from an outsider status?

It sounds as if it’s worth a try.

2: What AI needs to learn from autonomous vehicles

There’s an interesting piece in IEEE Spectrum by Mary L. ‘Missy’ Cummings, who has been working on automated vehicles, on the lessons from that for AI.

She’s a critic of the way in which automated vehicles have been regulated—to the point where she’s had physical threats to her safety. But she has also been working since 2021 with the U.S. National Highway Traffic Safety Administration (NHTSA) as the senior safety advisor.

Her piece looks at the similarities between automated vehicles and AI:

People do not understand that the AI that runs vehicles—both the cars that operate in actual self-driving modes and the much larger number of cars offering advanced driving assistance systems (ADAS)—are based on the same principles as ChatGPT and other large language models (LLMs).

And what that means, of course, is that they are both pattern-seeking systems that don’t understand the context in which they are making decisions:

Both kinds of AI use statistical reasoning to guess what the next word or phrase or steering input should be, heavily weighting the calculation with recently used words or actions... Neither the AI in LLMs nor the one in autonomous cars can “understand” the situation, the context, or any unobserved factors that a person would consider in a similar situation. The difference is that while a language model may give you nonsense, a self-driving car can kill you.

Cummings identifies five lessons we can learn for AI from automated vehicles.

(Image by eschenzweig. Copyright: Common License 4.0)

1. Human errors in operation get replaced by human errors in coding

One of the things that is said about AVs is that they will be much safer than human-driven vehicles because (US data) apparently 94% of car crashes are caused by driver error. But as Cummings says, this is just the final event—discussion of “causality” isn’t right. There are many other causes, such as poor visibility or bad road design. And adding in software causes new types of complexity:

software code is incredibly error-prone, and the problem only grows as the systems become more complex.

There’s a telling list of autonomous vehicle accidents in the article, but the main point is this:

AI has not ended the role of human error in road accidents. That role has merely shifted from the end of a chain of events to the beginning—to the coding of the AI itself. Because such errors are latent, they are far harder to mitigate.

2. AI failure modes are hard to predict

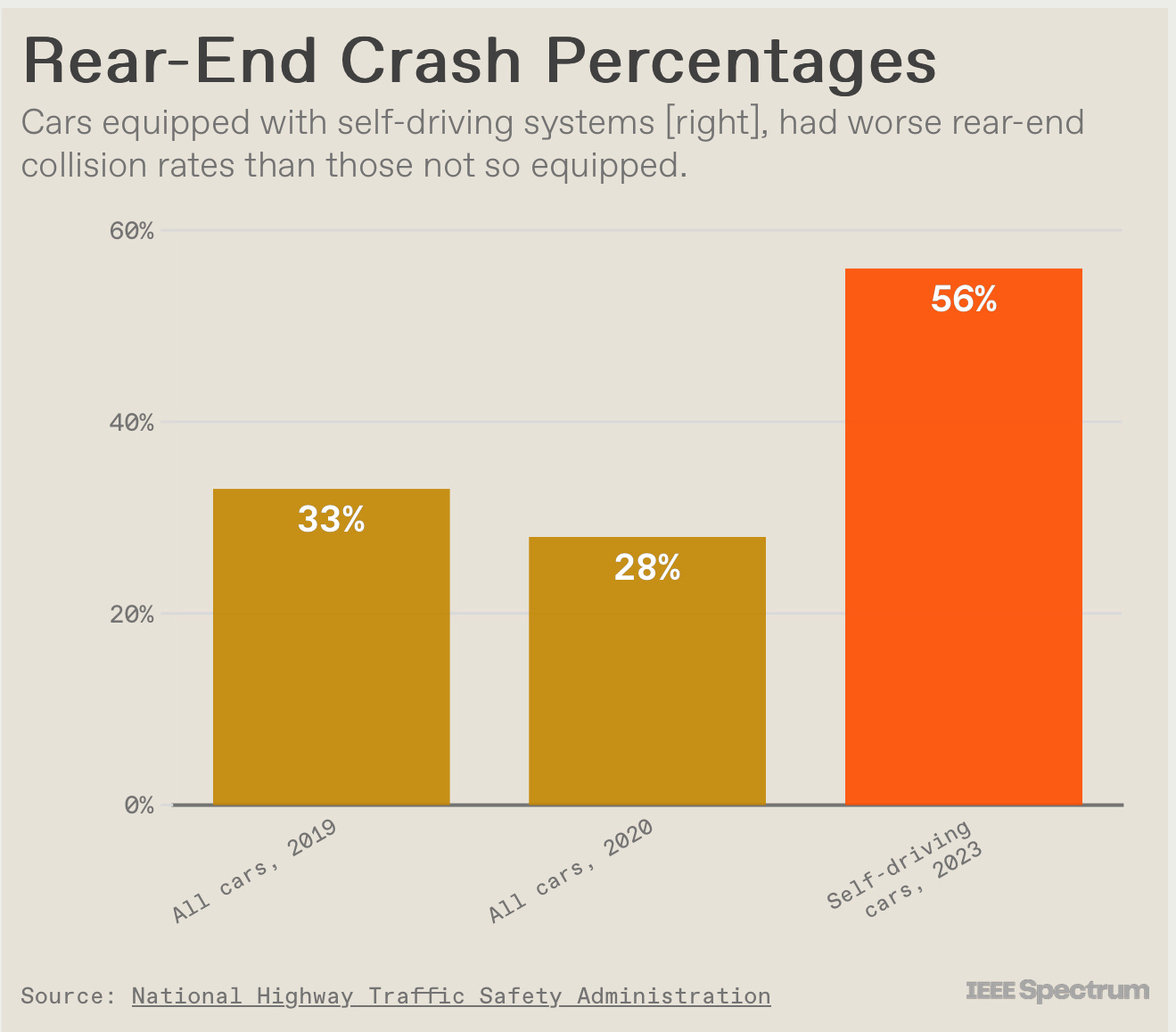

The second point is related to the first. Both AIs and autonomous vehicles work out what happens next on the basis of what they have learnt from their training sets. But because every possibility cannot be modelled in the training sets, this means that failure modes can be difficult to predict. Cars will behave differently on the same stretch of road in different weather conditions. “Phantom braking”, in which an AV will suddenly stop, leading to a rear end collision, is seen widely in vehicles from multiple manufacturers, without people being able to work out why. Sudden acceleration is also a widely reported problem:

Clearly, AI is not performing as it should. ... As other kinds of AI begin to infiltrate society, it is imperative for standards bodies and regulators to understand that AI failure modes will not follow a predictable path. They should also be wary of the car companies’ propensity to excuse away bad tech behavior and to blame humans.

3. Probabilistic estimates do not approximate judgment under uncertainty

Because AIs don’t understand context, they don’t operate well in situations where they have a lack of information, and also are unable to assess whether their estimates are good enough in such conditions.

Cruise robotaxis, for example, find it hard to operate when there are emergency vehicles in their vicinity:

These encounters have included Cruise cars traveling through active firefighting and rescue scenes and driving over downed power lines.

Other makes of robotaxi have experienced similar issues. They lack judgment under conditions of uncertainty:

(E)ven though neural networks may classify a lot of images and propose a set of actions that work in common settings, they nonetheless struggle to perform even basic operations when the world does not match their training data. The same will be true for LLMs and other forms of generative AI.

4. Maintaining AI is just as important as creating AI

A lot of the discussion of AIs seems to imply that they finish their training and then get sent off to operate. But it doesn’t work like that. They need to be updated constantly to make sure that they are informed about new situations.

Under-trained AIs experience what is known as “model drift”, a well-known problem in which the relationship between their input data and output data changes over time. Changes in settings or contexts can have the same effect: a robotaxi that is transported to a different city with different bus types will have problems detecting the buses.

Such drift affects AI working not only in transportation but in any field where new results continually change our understanding of the world. This means that large language models can’t learn a new phenomenon until it has lost the edge of its novelty and is appearing often enough to be incorporated into the dataset.
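The robotaxi example can be made concrete with a toy sketch. Everything in it is an assumption made for illustration and none of it comes from Cummings’s article: the vehicle lengths, the two hypothetical cities, and the deliberately crude “model”, which is just a length cut-off learned once in the first city and never updated.

```python
import random
from statistics import mean


def make_city(rng, bus_length_m, n=500):
    """Generate (length_in_metres, is_bus) pairs for a hypothetical city."""
    cars = [(rng.gauss(4.5, 0.5), False) for _ in range(n)]
    buses = [(rng.gauss(bus_length_m, 0.8), True) for _ in range(n)]
    return cars + buses


def fit_threshold(samples):
    """'Train' by placing a cut-off halfway between the two class means."""
    car_mean = mean(x for x, is_bus in samples if not is_bus)
    bus_mean = mean(x for x, is_bus in samples if is_bus)
    return (car_mean + bus_mean) / 2


def accuracy(threshold, samples):
    """Share of vehicles that the fixed threshold labels correctly."""
    hits = sum((x > threshold) == is_bus for x, is_bus in samples)
    return hits / len(samples)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
city_a = make_city(rng, bus_length_m=12.0)  # training city: full-size buses
city_b = make_city(rng, bus_length_m=7.0)   # new city: shorter minibuses

threshold = fit_threshold(city_a)  # learned once, never maintained
acc_a = accuracy(threshold, city_a)
acc_b = accuracy(threshold, city_b)
print(f"threshold={threshold:.2f} m, city A={acc_a:.2f}, city B={acc_b:.2f}")
```

The cut-off that separates cars from buses almost perfectly in the training city misses most of the shorter buses in the second city, even though nothing about the model itself has changed. Re-running `fit_threshold` on data from the new city is the toy equivalent of the maintenance that Cummings argues for.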

The obvious consequence for regulators is that they need to pay close attention to the maintenance regimes for AIs, to ensure that “model currency” is up to date.

5. AI has system-level implications that can’t be ignored

Self-driving cars have been designed to stop as soon as they can no longer resolve a situation they are in. This is an important safety feature, especially for passengers. But it can cause all sorts of problems for the rest of the traffic system.

Cummings writes about a Cruise robotaxi that suddenly halted during a turn, causing a crash with another vehicle. But even without causing collisions, stopped vehicles can cause large-scale disruption until they are moved:

A stopped car can block roads and intersections, sometimes for hours, throttling traffic and keeping out first-response vehicles. Companies have instituted remote-monitoring centers and rapid-action teams to mitigate such congestion and confusion, but at least in San Francisco... city officials have questioned the quality of their responses.

A wireless connectivity issue can stall a whole collection of vehicles. These system failures erode public trust in the technologies:

(A)ny new technology may be expected to suffer from growing pains, but if these pains become serious enough, they will erode public trust and support. Sentiment towards self-driving cars used to be optimistic in tech-friendly San Francisco, but now it has taken a negative turn due to the sheer volume of problems the city is experiencing.

Cummings identifies some clear cross-over lessons from the autonomous vehicles sector to AI more generally, which I have paraphrased here:

Companies need to understand the broader system-level impact of AI, but they also need oversight.

Regulators need to define reasonable operating boundaries for systems that use AI, and use these as a basis for regulation.

Regulators should respond to evidence of clear safety risks by setting limits, rather than deferring to industry.

The more I read of this stuff, the more likely I think it is that we’ll end up with regulatory regimes around AI that are like regimes for drugs or food: that companies will have to prove safety before they can deploy the product or service. Of course, we may need a crisis of some kind first, to help us to get there.

Elsewhere: Learning

My son Peter Curry has the second part of his review essay of the book How We Learn at his Substack. There are lots of interesting things in here about how we learn to speak and how we use numbers. Here’s an extract:

By the time of your first birthday, your ability to distinguish phonemes is essentially locked in place. The blocks of sound that make up your native language(s) locked in, and it takes immense effort to undo the ossification of these phonemes. You will have heard the effects of this when other people speak English.

If you’re one of those people who can’t read Part II unless you’ve already read Part I, well, the first part of the review essay is here.

j2t#483

If you are enjoying Just Two Things, please do send it on to a friend or colleague.