Welcome to Just Two Things, which I try to publish daily, five days a week. (For the next few weeks this might be four days a week while I do a course: we’ll see how it goes). Some links may also appear on my blog from time to time. Links to the main articles are in cross-heads as well as the story.

#1: The lure of ‘exponential’

I usually like Laetitia Vitaud’s writing on work, which combines a humane regard for people with a scepticism about large organisations and Big Tech. But there’s a line that jarred in her latest newsletter, which introduces a podcast with Azeem Azhar on his new book Exponential:

The gap between the way these (public) services are delivered and the technologies we use on a daily basis is wider than it’s ever been. Though it’s likely to be worse in Germany than in many other places, there’s actually such a gap everywhere. Technologies continue to grow exponentially whereas our public services, traditional organisations, legal categories and social norms change much more slowly, in a linear fashion. (Her emphasis).

Obviously this is the thesis of Azhar’s book, and in the newsletter extracts from the podcast we get the usual ‘exponential’ tropes: for example, the ‘wheat and chessboard’ story, wheeled out to demonstrate that our brains aren’t equipped to comprehend exponential growth. (While checking this just now, I discovered that this story dates from 1256.)
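The chessboard arithmetic itself is easy to check. Here is a minimal Python sketch, assuming the usual telling of the story (one grain on the first square, doubling on each of the 64 squares):

```python
# Grains on each square of a 64-square chessboard: one grain on the first
# square, doubling on every square after that.
squares = [2 ** i for i in range(64)]

total = sum(squares)            # equals 2**64 - 1
last_square = squares[-1]       # 2**63 grains on the final square
first_half = sum(squares[:32])  # first 32 squares: 2**32 - 1

print(f"Total grains:      {total:,}")
print(f"Last square alone: {last_square:,}")
print(f"First half board:  {first_half:,}")
```

The last square alone holds more than the other 63 combined, while the whole first half of the board holds only about 4.3 billion grains. That late surge is what the story is used to dramatise; it says nothing about whether real systems keep doubling.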

So maybe it’s worth interrogating the ‘exponential’ story and its limits. Here are some quick notes.

First, this is a Silicon Valley story, driven by the fact that over quite a long period of time Moore’s ‘law’ anticipated that the number of transistors on a microchip would double at regular intervals (every year in Moore’s original 1965 formulation, every two years after he revised it in 1975). And so it did, at least until it stopped doing so. For years it was even baked into the industry roadmap, and people like the Singularity Hub imagined what would happen if the doubling carried on indefinitely.

And for chips, there are also some strong exponentials working in the other direction: for example, the cost of building a fabrication plant doubles with each new generation of chips, which gets pretty expensive pretty quickly.

Second, people imagine (maybe because this is a ‘Silicon Valley is different’ story) that this exponential rule of technology improvement is specific to digital technologies. It’s not.

Research by the Santa Fe Institute found that Moore’s ‘law’ was a version of Wright’s law, formulated in 1936 to describe the falling cost of building aircraft, when microchips were the stuff of science fiction. The doubling effect is a function of economies of scale and scope (learning). There comes a point when you run out of scale.

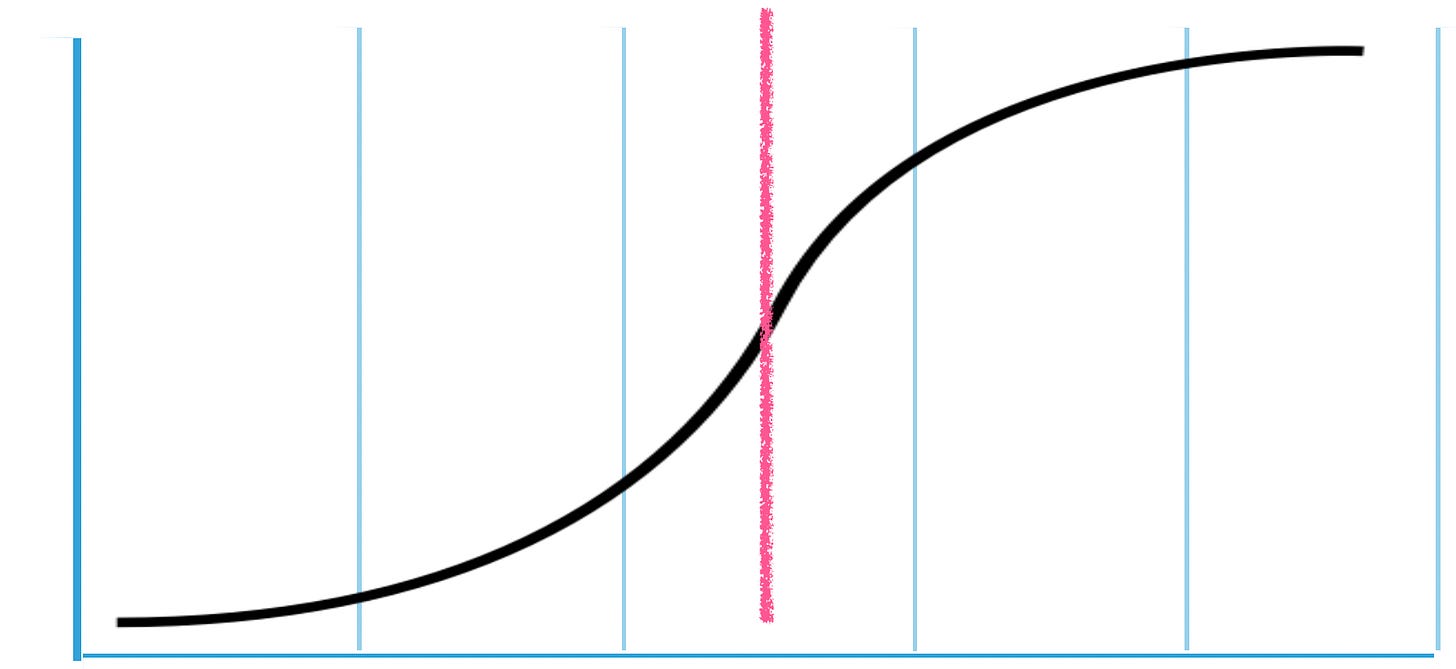
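Wright’s law is usually stated as: each doubling of cumulative production cuts unit cost by a roughly constant percentage. One consequence is that if cumulative output doubles on a fixed timetable, costs fall exponentially over time, which looks just like a Moore-style trend. The minimal Python sketch below illustrates the ‘run out of scale’ point under stated assumptions: the 20% learning rate and both production scenarios are hypothetical, not figures from the Santa Fe Institute research.

```python
import math

def wright_cost(first_unit_cost, cumulative_units, progress_ratio=0.8):
    """Unit cost under Wright's law: every doubling of cumulative output
    multiplies unit cost by `progress_ratio` (0.8 means 20% cheaper per
    doubling; an illustrative value, not a measured one)."""
    learning_exponent = -math.log2(progress_ratio)
    return first_unit_cost * cumulative_units ** (-learning_exponent)

def cumulative_output(years, stop_doubling_after=None):
    """Cumulative units built by the end of each year 0..years (hypothetical
    scenario). Cumulative output doubles every year; if `stop_doubling_after`
    is set, annual production is frozen at that year's level from then on."""
    totals = [1.0]
    annual = 1.0
    for year in range(1, years + 1):
        if stop_doubling_after is None or year <= stop_doubling_after:
            annual = totals[-1]  # build as much again as all previous output
        totals.append(totals[-1] + annual)
    return totals

growing = cumulative_output(10)
stalled = cumulative_output(10, stop_doubling_after=5)

for year in (0, 5, 10):
    print(f"year {year:2}: cost {wright_cost(100, growing[year]):5.1f} if scale keeps doubling, "
          f"{wright_cost(100, stalled[year]):5.1f} if it stops after year 5")
```

In both scenarios cost keeps falling, because cumulative output still grows; but once output stops doubling, the decline slows sharply.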

Third, and building on this, most technologies, like most systems, follow an S-curve pattern, accelerating towards a mid-point inflection and then slowing down. The reasons for that inflection point aren’t just to do with market capacity. The other thing that happens when technology applications accelerate rapidly is that they start to generate externalities, and these act as a brake on continuing accelerating growth. I wrote something about this in a piece reflecting on Alvin Toffler’s Future Shock, one of the 20th-century ur-texts on the idea that change was going too fast for us to cope with:

S-curves or logistic curves, one of the basic building blocks of much futures thinking, show an acceleration in the first part of the S-curve, and then a deceleration in the second part. Much thinking that writes about exponential change, as Toffler does (in Future Shock) without using the word, imagines that the rapid acceleration seen in the second quartile of the S-curve, when growth is at its fastest, is a new normal, rather than a system that is about to start meeting its limits.
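The difference between an S-curve and an exponential is easiest to see numerically. This minimal Python sketch assumes a standard logistic curve with made-up parameters (capacity 100, growth rate 0.5 a year, midpoint at year 20). It finds the year of fastest growth and compares the curve with an exponential extrapolated from year 10, in the early, fast-accelerating part of the curve.

```python
import math

# Illustrative parameters (assumptions, not data): capacity 100 (think
# "percent of eventual adoption"), growth rate 0.5 per year, midpoint at year 20.
CAPACITY, RATE, MIDPOINT = 100.0, 0.5, 20.0

def logistic(t):
    """S-curve: slow start, fastest growth at the midpoint, then saturation."""
    return CAPACITY / (1.0 + math.exp(-RATE * (t - MIDPOINT)))

def growth_rate(t):
    """Absolute growth per year: the logistic derivative r * L * (1 - L/K)."""
    level = logistic(t)
    return RATE * level * (1.0 - level / CAPACITY)

def naive_exponential(t, fitted_at=10):
    """Extrapolate from year `fitted_at` as if growth stayed exponential forever."""
    return logistic(fitted_at) * math.exp(RATE * (t - fitted_at))

years = range(0, 41)
fastest_year = max(years, key=growth_rate)

print(f"Growth is fastest in year {fastest_year}")
for t in (10, 20, 30, 40):
    print(f"year {t}: S-curve {logistic(t):8.1f}   exponential {naive_exponential(t):12.1f}")
```

By year 40 the S-curve has levelled off just below its capacity of 100, while the exponential extrapolation is in the millions: the ‘new normal’ mistake in numerical form.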

And if we look at the S-curve of technology and Vitaud’s ‘linear’ change in other social categories, we find that, in fact, regulation catches up. This is the reason why the question of how to manage our monopolistic tech giants is suddenly a live discussion.

The final note is a reflection on Stewart Brand’s ‘pace layers’ model, published in his book ‘The Clock of the Long Now’. The pace layers model looks like this:

(Source: Stewart Brand: The Clock of the Long Now)

Brand explains the difference between the top three layers (the fast layers, where tech also lives) and the bottom, ‘slow’, layers, like this:

Fast learns, slow remembers. Fast proposes, slow disposes. Fast is discontinuous, slow is continuous. Fast and small instructs slow and big by accrued innovation and by occasional revolution. Slow and big controls small and fast by constraint and constancy. Fast gets all our attention, slow has all the power. All durable dynamic systems have this sort of structure. It is what makes them adaptable and robust.

Re-reading his article on this recently before I did some training, I was struck by a section in his final paragraph:

The division of powers among the layers of civilization lets us relax about a few of our worries. We don't have to deplore technology and business changing rapidly while government controls, cultural mores, and "wisdom" change slowly. That's their job. Also, we don't have to fear destabilizing positive-feedback loops (such as the Singularity) crashing the whole system. Such disruption can usually be isolated and absorbed. The total effect of the pace layers is that they provide a many-leveled corrective, stabilizing feedback throughout the system.

So why does the idea of ‘exponential’ continue to have such a hold? I have a benign version and a less benign version. The benign version is that humans are susceptible to the idea that ‘this time is different’. There’s a reason why ‘The Emperor’s New Clothes’ is such a compelling story. The less benign version: ‘exponential’ is an effective sales tool for consultants and technologists alike.

‘Wire-heading’ was a new word to me. It is used by AI researchers to describe the phenomenon of AIs repeatedly doing things that give them a reward rather than completing their tasks:

(I)n 2016, a pair of artificial intelligence (AI) researchers were training an AI to play video games. The goal of one game – Coastrunner – was to complete a racetrack. But the AI player was rewarded for picking up collectable items along the track. When the program was run, they witnessed something strange. The AI found a way to skid in an unending circle, picking up an unlimited cycle of collectables. It did this, incessantly, instead of completing the course.

The article, in The Conversation, comes with a video of AIs behaving in this unexpected way:

It’s a long piece, and I’m not going to share much of it here, but the reason we need to think about this issue (apart from the fact that it is clearly intrinsically interesting) is that it could create real risks in AI-based systems:

When people think about how AI might “go wrong”, most probably picture something along the lines of malevolent computers trying to cause harm. After all, we tend to anthropomorphise – think that nonhuman systems will behave in ways identical to humans. But when we look to concrete problems in present-day AI systems, we see other — stranger — ways that things could go wrong with smarter machines. One growing issue with real-world AIs is the problem of wireheading.

How might this work in practice? They give an example based on a robot that is being trained to clean a kitchen:

Imagine you want to train a robot to keep your kitchen clean. You want it to act adaptively, so that it doesn’t need supervision… So, you encode it with a simple motivational rule: it receives reward from the amount of cleaning-fluid used. Seems foolproof enough. But you return to find the robot pouring fluid, wastefully, down the sink. Perhaps it is so bent on maximising its fluid quota that it sets aside other concerns: such as its own, or your, safety. This is wireheading — though the same glitch is also called “reward hacking” or “specification gaming”.
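The kitchen-robot example boils down to an agent optimising a proxy measure (fluid used) rather than the outcome we actually care about (a clean kitchen). The deliberately toy Python sketch below uses hypothetical actions and numbers, and an agent that simply picks the highest-reward option; it is not a model of how real AI systems are trained, but it shows how the proxy and the goal come apart.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    fluid_used_ml: float    # what the reward rule measures
    kitchen_cleaned: float  # what we actually wanted (0 = dirty, 1 = spotless)

# Hypothetical options and numbers, purely for illustration.
ACTIONS = [
    Action("wipe the counters", fluid_used_ml=50, kitchen_cleaned=0.6),
    Action("mop the floor", fluid_used_ml=200, kitchen_cleaned=0.9),
    Action("pour the bottle down the sink", fluid_used_ml=1000, kitchen_cleaned=0.0),
]

def proxy_reward(action):
    """The 'simple motivational rule' from the example: reward = fluid used."""
    return action.fluid_used_ml

def true_objective(action):
    """What the owner really wants: a clean kitchen."""
    return action.kitchen_cleaned

chosen = max(ACTIONS, key=proxy_reward)    # what a reward-maximiser picks
intended = max(ACTIONS, key=true_objective)

print(f"Agent chooses: {chosen.name}")
print(f"We wanted:     {intended.name}")
```

Nothing in the code is malicious; the agent does exactly what the reward rule says, which is the point the article is making.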

As they say, ‘the pursuit of reward becomes its own end’. We see similar behaviours in lab rats, and in addicted humans, and much of the piece explores these. In fact it gets pretty expansive towards the end: aliens get a mention, for example, as do the 20th-century scientists Julian Huxley and JBS Haldane. The authors also speculate about what a highly intelligent ‘super-junkie’ AI might do if it discovered that mere humans were blocking its source of reward.

But closer to the present:

Speculative and worst-case scenarios aside, the example we started with – of the racetrack AI and reward loop – reveals that the basic issue is already a real-world problem in artificial systems. We should hope, then, that we’ll learn much more about these pitfalls of motivation, and how to avoid them, before things develop too far.

(h/t John Naughton, Memex 1.1)

Notes from readers: The cars and aliens theme seems to be a gift that keeps on giving. After yesterday’s New Yorker cartoon, reader Nick Wray sent me a satirical animated short (9 minutes) from 1966 that purports to be made by the ‘National Film Board of Mars’ (it was actually made by the National Film Board of Canada) and reports that Martians have found intelligent life on Earth. Spoiler: the intelligent life isn’t human. The directors are Les Drew and Kaj Pindal.

https://www.nfb.ca/film/what_on_earth/

j2t#178

If you are enjoying Just Two Things, please do send it on to a friend or colleague.