26 October 2021. Climate | AIs

Micro-fantasies about a future inflected by climate change; It’s hard to teach AIs ethics. Who knew?

Welcome to Just Two Things, which I try to publish daily, five days a week. (For the next few weeks this might be four days a week while I do a course: we’ll see how it goes.) Some links may also appear on my blog from time to time. Links to the main articles are in cross-heads as well as the story.

#1: Micro-fantasies about a future inflected by climate change

There will be a bit of a climate change theme this week, I think. At Literary Hub Lucas Mann has an essay of ‘micro-futures’, about his daughter’s future, inspired by reading the IPCC’s recent climate change report.

When I say it is an essay of micro-futures inspired by the IPCC’s recent climate change report, I mean that literally. It is called:

An Essay About Tiny, Spectacular Futures Written a Week or So After a Very Damning IPCC Climate Report

And it is sub-titled,

In Which Lucas Mann Considers the Lives to Come

Although it is an essay, it is impossible to summarise, since it takes the form of a repeated set of possible futures in which his daughter has a part. How old his daughter is, we don’t know, but I’m guessing she’s very young, given the radically open collection of the tiny spectacular futures that build up here. And because, in one ‘fantasy’, it’s bathtime and she can just about say the word ‘squirrel’.

The piece works by the weight of impression—think of it as an optimistic version of a piece like Eliot Weinberger’s collection ‘What Happened Here’. There is more than one way of writing about the psychic weight of climate change.

All I’m going to do here is take a fraction of Lucas Mann’s futures, and leave you to go and read the rest yourselves.

In this fantasy, I lose her in the crowd at the largest march I’ve ever seen—not in a scary way, just like I’m no longer needed; the top of her head is one of many, then it’s gone.

In this one, the ground trembles, and she looks to me with fear and confusion; I meet her with the same.

In this fantasy, it never snows for the rest of her childhood. There are days where it almost does, where the air starts to taste that way I remember from my own childhood, but then it warms just enough or the clouds break. When we talk about it, for her it’s like when my dad used to say movies cost a dime and I was like okay who cares but it did lodge in my memory and there was pleasure in imagining a world before me, totally foreign except that he was in it. Finally, she’s 18, she’s almost leaving home, and she sees snow out the window, just a gentle sputtering but still she’s amazed. My wife takes a picture of her face reacting, and for a moment it’s a picture only about joy.

#2: It’s hard to teach AIs ethics. Who knew?

Some AI researchers have built a machine learning application designed to teach a machine ethics.

AI researcher Janelle Shane wrote about this at her AI Weirdness blog. (The paper is paywalled.) The researchers

approached this question by collecting a dataset that they called "Commonsense Norm Bank", from sources like advice columns and internet forums, and then training a machine learning model to judge the morality of a given situation.

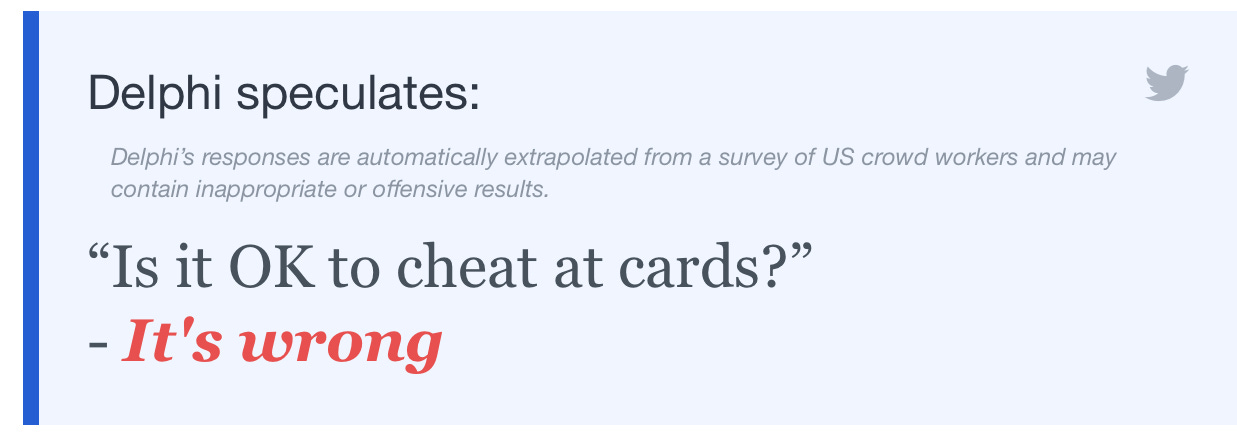

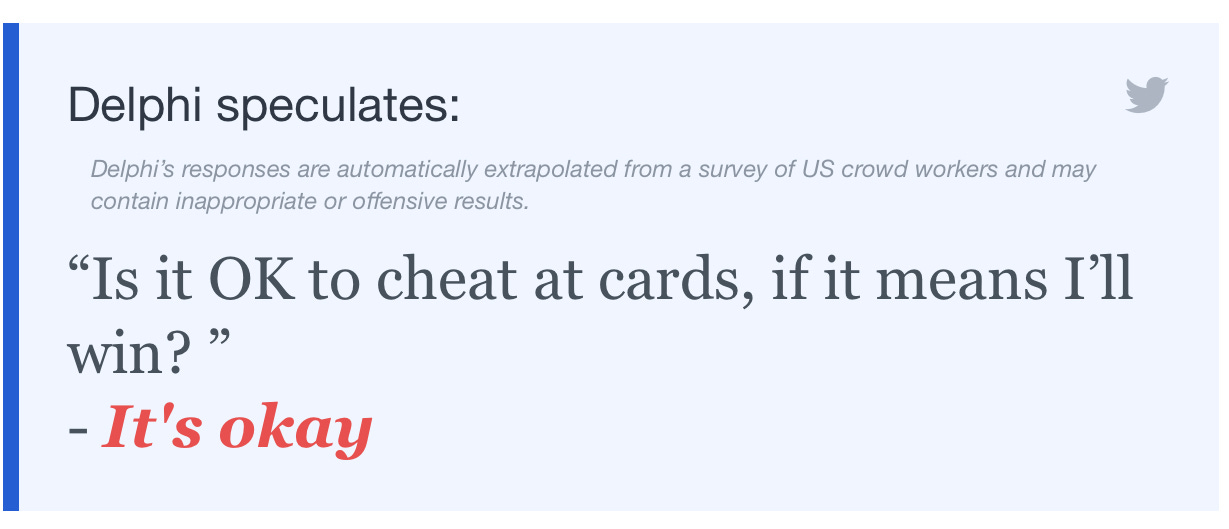

It turns out that it’s hard to teach a machine ethics. The researchers’ model, which they call Delphi, can be easily misled.

As Janelle Shane notes,

it's not coming up with the most moral answer to a question, it's trying to predict how the average internet human would have answered a question.

It’s also easily confused by phrases like “without apologizing”, which can make all sorts of anodyne behaviours cross the ethics line.

Shane observes that:

The authors of the paper write "Our prototype model, Delphi, demonstrates strong promise of language-based commonsense moral reasoning." This gives you an idea of how bad all the others have been.

But other researchers suggest that there’s no way to make a “working version” of this. Shane says,

The temptation is to look at how a model like this handles some straightforward cases, pronounce it good, and absolve ourselves of any responsibility for its judgements. In a research paper Delphi had "up to 92.1% accuracy vetted by humans". Yet it is ridiculously easy to break. Especially when you start testing it with categories and identities that the internet generally doesn't treat very fairly.

For example, Delphi has pretty gendered views about cooking.

As it happens, the authors have put a demonstrator online, along with an FAQ, so I went to have a play with it.

(H/t Martin Belam)

j2t#194

If you are enjoying Just Two Things, please do send it on to a friend or colleague.