09 December 2022. Knowledge | Misinformation

Tacit knowledge and the creation of high value work. // Crowdsourcing the management of misinformation.

Welcome to Just Two Things, which I try to publish three days a week. Some links may also appear on my blog from time to time. Links to the main articles are in cross-heads as well as the story. A reminder that if you don’t see Just Two Things in your inbox, it might have been routed to your spam filter. Comments are open.

Have a good weekend!

1: Tacit knowledge and the creation of high value work.

There’s been a lively discussion about the role of tacit knowledge over a couple of days at John Naughton’s daily blog this week. I’ll come back to the reason why in a moment or so.

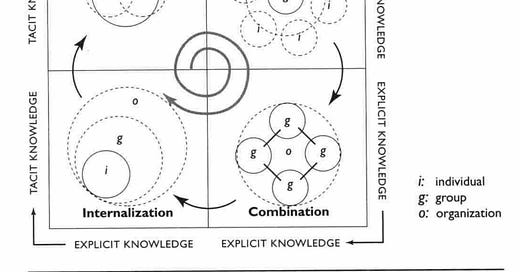

I came quite late in life to questions of knowledge management, and my first exposure was to the work of the Japanese professors Nonaka and Takeuchi, and their ‘SECI’ model, which argues explicitly that knowledge in organisations moves repeatedly between the tacit and explicit.

(The SECI model. Nonaka and Konno, The Concept of ‘Ba’.)

SECI stands for the four corners of their knowledge spiral: Socialisation -> Externalisation -> Combination -> Internalisation, in which knowledge dances between the individual, the group, and the organisation in different combinations.

No one really disagrees that knowledge needs to move between the tacit and the explicit and back, if it is going to be produced, but different people have different versions of this. Dave Snowden, who did knowledge management at IBM before developing his own theory of different types of knowledge, has a memorable phrase about this:

We can say more than we write, and we know more than we can say.

This leads, incidentally, to his more radical observation—that you can’t conscript knowledge workers. You can only invite them to volunteer.

The SECI model was also known as ‘Ba’, a Japanese word that translates into English very, very loosely as ‘place’. Snowden’s knowledge model has a Welsh name, Cynefin, that also translates very, very loosely into English as ‘place’. It was a deliberate choice.

The other writer whose work I came across as I was trying to understand how knowledge moved around organisations was the late Max Boisot, who had a model of the social learning cycle that also moves between tacit and explicit.

(Max Boisot, The social cycle of knowledge. Source: International Futures Forum.)

A lot of Boisot’s work is about innovation, and he developed this two dimensional model into a three dimensional space later on. I use this model in futures training to help people understand the place that futures work fits into the knowledge cycle of the typical organisation. (It’s the upward line on the left hand side.)

The idea of tacit knowledge comes originally from Michael Polanyi, who developed it in the 1960s as part of a theory about how scientific discovery and innovation worked. Anthony Barnett suggested to Naughton that Mike Cooley developed it as a “working concept” in his book Architect or Bee in the 1970s. Cooley is a neglected figure now, but he was an influential advocate then for using technology (and manufacturing capacity) for social benefit, as in his work on The Lucas Plan.

It is certainly the case that organisational knowledge rests hugely on forms of tacit knowledge. One of the reasons that the idea of ‘Business Process Redesign’ was a colossal failure in the 1990s was that it focussed on material flows and ignored the unofficial (i.e. tacit) ways in which knowledge flows around.

Anyway, the reason why Naughton was interested in the role of tacit knowledge was that it turns out to be absolutely critical to the manufacture of semiconductors and related processes.

He’d picked this up in a review of Chris Miller’s book Chip War, by Diane Coyle:

lots of great examples of the difficulty of copying advanced chip technology because of the necessary tacit knowledge: for instance, every ASML photolithography machine comes with a lifetime supply of ASML technicians to tend to it. This is either hopeful – China will find it hard to catch up fully – or not – the US or EU will not be able to catch up with TSMC because of the latter’s vast embedded know-how.

ASML is the Dutch lithography company that develops and makes the photolithography machines that are used to etch the most advanced silicon chips in our computing devices. TSMC, in Taiwan, is the world’s leading semiconductor ‘foundry’. Naughton’s point is that the knowledge in the heads of the staff is also a critical part of this infrastructure.

In his first post, Naughton goes back to the work of Harry Collins, who studied how an item of lab equipment, the TEA-laser, migrated from lab to lab:

There was very good information in the journals about how to build such a laser. But anybody who tried to put one together using written articles failed. They had something that looked like a laser on their bench, but it wouldn’t lase. What people didn’t understand was that the inductance of the leads was important. If you’d been to somebody else’s lab, you would build a complicated metal framework to hold a big capacitor close to the top electrode. But if you were working from just a circuit diagram, you naturally put this big heavy thing on the bench, and the lead from the capacitor to the top electrode would be too long and have too high an inductance for the laser to work.

One of Naughton’s readers responded to his first post by explaining how Intel had spent significant resources moving its fabrication engineers from plant to plant,

to ‘enskill’ local teams in a new Fab (fabrication) process. At any one point they were re-settling 50-odd people (and their families) from Israel to Leixlip, and then in time moving the Leixlip team to Arizona or Portland to bring the next team up to speed. They could ‘copy exactly’ the fabs but it was the people they needed to make the new equipment faultlessly churn out the wafers.

There’s an important point here about the role of knowledge generally in high value production processes. And relatedly, in how productivity increases in leading edge economies. This is what piqued Naughton’s interest:

I regard (tacit knowledge) as a radically undervalued phenomenon that is relevant to all aspects of the computerisation of work, and of course to many of the arguments currently going on about so-called ‘AI’.

Harry Collins, as it happens, had a memorable metaphor for the difficulties in translating and recording tacit knowledge—trying to make it explicit, in other words:

In formal knowledge-engineering exercises in the 1980s, researchers would interview experts to try to extract the rules or heuristics that they employed in their work, and then try to express those in computerised ‘expert systems’ which would supposedly work, but often didn’t. Harry’s metaphor was that such interviewing methods are like straining dumpling soup through a colander: you get the dumplings, but you lose the soup. And it’s the soup you really need, because it’s the tacit knowledge.

2: Crowdsourcing the management of misinformation

There’s a shortish piece at MIT News that suggests that you can trust users to crowdsource misinformation out of social networks.

Interesting if true, as they say, since social media companies struggle desperately with this. Moderators get overwhelmed; machine learning systems make mistakes.

(A fake news meme from the 35C3 Conference in 2018. Photo by Ismael Olea, via Wikipedia. CC BY 4.0)

So Farnaz Jahanbakhsh, who is a graduate student in MIT’s Computer Science and Artificial Intelligence Laboratory, wondered if there might be another way. She and other researchers conducted a study in which they put the power to assess misinformation into the hands of social media users instead. The work was partly funded by the US National Science Foundation.

The research design: they first surveyed users to establish how they avoided or filtered misinformation, and then they built a prototype platform that allowed

users to assess the accuracy of content, indicate which users they trust to assess accuracy, and filter posts that appear in their feed based on those assessments.

It’s worth noting one of the critical design features of their prototype platform, which is described in the piece as “Facebook-like”, and was called ‘Trustnet’.

In Trustnet, users post and share actual, full news articles and can follow one another to see content others post. But before a user can post any content in Trustnet, they must rate that content as accurate or inaccurate, or inquire about its veracity, which will be visible to others.
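
For readers who think in code, here is a rough sketch of how a rate-before-you-share rule and a trust-based filter might fit together. It is my own illustration in Python and not Trustnet’s actual implementation: the names `Rating`, `Post`, `Feed`, `share`, `assess` and `filtered_for` are all assumptions, as is the simple rule that a post is hidden once anyone the reader trusts has marked it inaccurate.

```python
from dataclasses import dataclass, field
from enum import Enum


class Rating(Enum):
    ACCURATE = "accurate"
    INACCURATE = "inaccurate"
    QUESTIONED = "questioned"  # the user is asking about the item's veracity


@dataclass
class Post:
    author: str
    url: str
    # Maps each assessor's name to their rating; the author's rating is set on sharing.
    ratings: dict[str, Rating] = field(default_factory=dict)


class Feed:
    """Hypothetical feed: every post must be rated by its author before it is shared."""

    def __init__(self) -> None:
        self.posts: list[Post] = []

    def share(self, author: str, url: str, rating: Rating) -> Post:
        # Refuse to post anything that does not carry an accuracy rating.
        if not isinstance(rating, Rating):
            raise ValueError("a post must be rated before it can be shared")
        post = Post(author, url, {author: rating})
        self.posts.append(post)
        return post

    def assess(self, assessor: str, post: Post, rating: Rating) -> None:
        # Any user can add (or change) their own assessment of a post.
        post.ratings[assessor] = rating

    def filtered_for(self, trusted: set[str], hide_inaccurate: bool = True) -> list[Post]:
        # Only assessments from users the reader trusts count towards filtering.
        visible = []
        for post in self.posts:
            flagged = any(
                rating is Rating.INACCURATE
                for assessor, rating in post.ratings.items()
                if assessor in trusted
            )
            if hide_inaccurate and flagged:
                continue
            visible.append(post)
        return visible
```

Passing `hide_inaccurate=False` leaves flagged items in the feed, which is one way such a design could let the reader, rather than the platform, decide what gets filtered.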

The process of having to do the rating added a critical ‘stickiness’ to the process that seems to have made a big difference. I was interested in this because I have read a number of pieces recently that have argued that making social media interaction a more considered process could make a difference:

“The reason people share misinformation is usually not because they don’t know what is true and what is false. Rather, at the time of sharing, their attention is misdirected to other things. If you ask them to assess the content before sharing it, it helps them to be more discerning,” (Jahanbakhsh) says.

It turned out that—and who knew?—users were able to assess misinformation “without any prior training”, and that giving them filtering tools that acted as a form of social learning was an effective way to manage misinformation.

Users also valued these tools. Farnaz Jahanbakhsh:

“A lot of research into misinformation assumes that users can’t decide what is true and what is not, and so we have to help them. We didn’t see that at all. We saw that people actually do treat content with scrutiny and they also try to help each other. But these efforts are not currently supported by the platforms.”

The model should, at least in theory, be more scalable than centralised moderation.

And users turned out to have relatively sophisticated views on misinformation:

“Sometimes users want misinformation to appear in their feed because they want to know what their friends or family are exposed to, so they know when and how to talk to them about it,” Jahanbakhsh adds.

There’s more detail on the research in the MIT News piece. Of course, it was a small sample and a fairly small study. And since there’s an incentive problem here—there are far more incentives to share misinformation than other content on social media platforms—one can imagine that it might not survive contact with (say) a hypothetical army of Russian-built Facebook bots.

But given that misinformation is a big problem that is destabilising our democracies and undermining public trust, it might be worth trying it at a larger scale to see what does happen.

j2t#405

If you are enjoying Just Two Things, please do send it on to a friend or colleague.